Posts

Some initial thoughts on my action research design as I get ready to write up the study methods and timeline:

- Since I already have data to look through, I'm starting to focus on a mixed-method study, looking at past data and teacher feedback to plan future sessions for comparison.

- Since we have data to start with, I'm planning on an exploratory mixed-method design.

- I think exploratory is more beneficial in the long run because I'm interested in mechanisms and structures which increase implementation of ideas by teachers, not just explaining why they do or don't implement.

- We're finishing workshops this year and already planning for summer work. If I can identify some patterns and structures and correlate the level of implementation, we'll have a good starting point for aligning all PD, not just my team's, to the new structures using data-backed conclusions.

- Given the timeframe, gathering consent forms right now is difficult, considering we're coming up on spring break and the testing windows. Doing aggregate, anonymized data analysis will allow us to draft a descriptive letter before the summer PD series begins, and we can make informed consent a part of the workshop instead of a mass email.

I'm in the midst of an action research course and my topic is evaluating and reflecting on our systems of PD in the district. This post is the literature review I did as part of the research process. This is similar to some of the work I did last year on leadership development and PD and those links to related items are at the bottom of this post.

“Professional development” as a catch-all for staff training carries a degree of ambiguity which clouds our ability to critically discuss and reflect on programming. As an instructional team, we have not taken time to critically assess and address our effectiveness in presentation or facilitation, nor have we done any work to gauge the effectiveness of professional development in changing teacher practice.

In Elkhart, we have worked mainly with self-selected groups of teachers as technical coaches according to the definition provided by Hargreaves & Dawe (1990). Though our sessions contained collaborative elements, they were singularly focused on developing discrete skills to meet an immediate need. These sessions have been effective in closing a significant digital teaching and learning skill gap present in the teaching staff. We have not, to date, considered specific models of professional development as a mechanism for planning or evaluating the effectiveness of workshops offered in a given school year.

According to Kennedy (2005), comparative research exploring models of professional development is lacking. Her analysis and resulting framework provide helpful questions when assessing and determining the type of offerings for staff. Reflective questions range from the type of accountability organizers want from teachers to determining whether the professional development will focus on transformative practice or serve as a method of skill transmission. It is tempting to always reach for models which support transformative practice, but there are considerations which need to be made for those structures to be truly transformative.

As a district, our efforts have centered on active processes with teachers, but this has been done without an objective measure of what those types of programs actually look like in practice. Darling-Hammond & McLaughlin (1995) summarize our working goal succinctly: “Effective professional development involves teachers both as learners and as teachers and allows them to struggle with the uncertainties that accompany each role,” (emphasis mine). Struggling with uncertainties requires some measure of collaboration, but collaboration alone does not necessarily lead toward transformative ends and can even drive top-down mandates to improve palatability (Hargreaves & Dawe, 1990).

To structure collaborative development opportunities, Darling-Hammond & McLaughlin (1995) make a case for policies which “allow [collaborative] structures and extra-school arrangements to come and go and change and evolve as necessary, rather than insist on permanent plans or promises.” This counters many district-driven professional development programs which require stated goals, minutes, and outcomes as “proof” of the event’s efficacy and resultant implementation. The problem with these expectations is that truly collaborative groups are constantly changing their goals or foci to meet changing conditions identified by the group (Burbank & Kauchak, 2003).

In response, a “Transformative Model” (Kennedy, 2005) attempts to move beyond a simple “collaboration” label and build a professional development regimen which pulls the best from skills-based training into truly collaborative pairs or small groups attempting to make changes in practice. She argues that transformative development must consist of a multi-faceted approach, from training where training is needed to open spaces when groups need time to discuss. All work falls under the fold of reflection and evaluation of practice in the classroom. Burbank & Kauchak (2003) modeled a collaborative structure with pre-service and practicing teachers taking part in self-defined action research programs. At the end of the study, there were qualitative differences in the teachers’ responses to the particulars of the study, but most groups agreed that it was a beneficial process and they would consider participating in a similar structure in the future. Hargreaves & Dawe (1990) alluded to the efficacy of truly collaborative research as a way to combat what they termed “contrived collegiality,” where outcomes were predetermined and presented through a “collaborative” session.

Collaboration as a means alone will not change practices. Hargreaves and Dawe (1990) warn against contrived collegiality, which is characterized by collaborative environments with scope limited “to such a degree that true collaboration becomes impossible”. Groups working toward a shared goal of transformative practices are undercut when the professional development structures disallow questioning of classroom, building, or district status quos. If collaborative professional development groups are allowed to “struggle with the uncertainties” (Darling-Hammond & McLaughlin, 1995) present in education both in and beyond the classroom, the group will be more effective in reaching and implementing strategies to improve practice. This view subtly reinforces Hargreaves & Dawe’s (1990) perspective that collaboration must tackle the hard problems in order to have a lasting impact.

There are several other factors identified which contribute to the strength and efficacy of professional development. These include continuous, long-term commitments (Darling-Hammond & McLaughlin, 1995; Hargreaves & Dawe, 1990; Richardson, 1990), work that is immediately connected to classroom practice (Darling-Hammond & McLaughlin, 1995; Richardson, 1990; Burbank & Kauchak, 2003), and a group dynamic which recognizes the variety of perspectives which inform teaching habits across a wide spectrum of participants (Kennedy, 2005).

As an instructional coach, one of my core responsibilities is to help create a culture of learning amongst members to mitigate division or power dynamics based on experience (Darling-Hammond & McLaughlin, 1995; Burbank & Kauchak, 2003), which is particularly evident in mixed-experience groups. In addition to fostering a strong group dynamic, the instructional coaching role becomes facilitative rather than instructive to help teachers address problems of practice (Darling-Hammond & McLaughlin, 1995). It is easy to fall into a technical coaching position in collaborative groups, but such a role reduces the chances for transformative work to emerge as teachers become trainees rather than practitioners (Kennedy, 2005). This becomes more apparent as districts add instructional coaching positions but limit the scope of the role to training sessions under the guise of “encouraging teachers to collaborate more…when there is less for them to collaborate about” (Hargreaves & Dawe, 1990). Ultimately, the coaching role is most effective when it is used to support teachers through “personal, moral, and socio-political” choices (Hargreaves & Dawe, 1990) rather than technical skill and competence.

In order to fully reflect upon and evaluate our programming, Kennedy’s (2005) framework for professional development will serve as a spectrum on which to categorize our professional development workshops and courses. Hargreaves & Dawe (1990) also provide helpful reflective questions (e.g., are teachers equal partners in experimentation and problem solving?) to evaluate just how collaborative our “collaborative” groups are in practice. Once our habits of working are established on the framework, we can address shortcomings in order to build toward more effective coaching with the teachers in the district.

Resources

Burbank, M. D., & Kauchak, D. (2003). An alternative model for professional development: Investigations into effective collaboration. Teaching and Teacher Education, 19(5), 499-514. doi:10.1016/S0742-051X(03)00048-9

Darling-Hammond, L., & McLaughlin, M. W. (1995). Policies that support professional development in an era of reform. Phi Delta Kappan, April 1995, 597+. Biography In Context, http://link.galegroup.com.proxy.bsu.edu/apps/doc/A16834863/BIC?u=munc80314&sid=BIC&xid=abd8b6f2. Accessed 5 Mar. 2019.

Hargreaves, A., & Dawe, R. (1990). Paths of professional development: Contrived collegiality, collaborative culture, and the case of peer coaching. Teaching and Teacher Education, 6(3), 227-241.

Kennedy, A. (2005). Models of continuing professional development: A framework for analysis. Journal of in-service education, 31(2), 235-250.

Richardson, V. (1990). Significant and worthwhile change in teaching practice. Educational Researcher, 19(7), 10-18. doi:10.2307/1176411

Here's a presentation I did for a class about a year ago over similar themes, but with a leadership spin.

The featured image is by Jaromír Kavan on Unsplash.

From a post last week where I continued to refine my research question:

How does continuity of study (ie, a PD sequence rather than a one-off workshop) affect implementation?

Is there an ideal timing? How often (in a series) seems to be effective?

What does the interim look like in between workshops?

Are volunteers more likely to implement training? Or are groups, even if they're elected to come by leadership?

How does the group dynamic affect buy in or implementation after the fact? Would establishing norms at the outset remove stigma?

I thought I was going to use, "How can my role effect change through professional development?" which isn't a great question for research. It's good for reflection, but it's too specific to me and not great for sharing in a collaborative environment (my team, for example).

Based on some of my literature research, I'm going to broaden back out to generalizing PD structures as a practice rather than focusing on my own role within those structures. Right now, I'm thinking:

How will aligning our professional development programs to goal-oriented frameworks affect implementation by participants?

I'm feeling good about this question for a few reasons:

- Much of my day to day work is with individual teachers. They often have a larger focus and I spend my time helping those teachers find solutions or methods to reach those goals.

- I am involved in building-level discussions through departments or administrators. It isn't as frequent as one-on-one contact with teachers, but I do work with administrators to help their staff reach collective goals.

- My team is housed at the district level, not individual schools. My involvement at the highest level eventually trickles down to buildings and individual classrooms.

We've never done a full, research-based survey on the PD activities we offer in order to evaluate whether or not our work is effective in changing instruction at any given level. Using academic research for a guide, we can begin to evaluate and categorize our work in view of larger goals. Hopefully, we are able to identify patterns, strengths, and weaknesses as individuals and as a team as we begin planning for next year's programs.

This is a copy/paste of a post I wrote in a graduate class. I'm posting it here so I can get back to the ideas after the Blackboard course finishes.

I'm still working on my lit review and I've come across two articles that propose classifications of types of PD typically offered in school. Hargreaves & Dawe (1990) discuss "coaching" as a larger construct. The term has been used more recently (even my title has "coach" in it) but it's been poorly defined in terms of the job description and my actual, day to day work. The authors cite Garmston's (1987) model, which defines three structures: technical, collegial, and challenge coaching. Hargreaves & Dawe describe each model and then evaluate its effectiveness in changing school culture. The article is timely because I'm asking similar questions as I reflect on my own work with teachers.

The other helpful article (Kennedy, 2005) I found provides a framework for analyzing and qualifying nine models of professional development and proposes a structure for analysis of effectiveness with teachers. Categories align with Hargreaves & Dawe and provide more nuance in determining the type from a teacher's perspective rather than the coach's.

Two articles do not make a statistical sample, but both reach similar conclusions despite being published 15 years apart. Development for teachers must include reflection not only on individual practice but also on the political and power structures placed on teachers and how teachers function within those structures. Challenging the status quo through methods like peer review, paired or collaborative action research, or even something more elaborate like instructional rounds, is critical if lasting change is going to take effect.

Hargreaves, A., & Dawe, R. (1990). Paths of professional development: Contrived collegiality, collaborative culture, and the case of peer coaching. Teaching and teacher education, 6(3), 227-241.

Kennedy, A. (2005). Models of continuing professional development: A framework for analysis. Journal of in-service education, 31(2), 235-250.

I started a series of professional development workshops with teachers this week. It's a series of half-day work sessions with full departments and I'm focusing on active learning and assessment techniques all centered on literacy within the content area. It's really a part two to a full-day conference we held for teachers earlier this month and my task (and goal) is to make sure teachers are equipped with the *how* after hearing the *why* at the kickoff.

My original question was framed as a negative: Why don't teachers implement learning from professional development? I think this has an inherent bias, assuming that teachers don't try to use what they've learned. Based on my work this week (and looking ahead), there is definitely a desire to do things and it seemed that the lack of planning time with colleagues was a bigger cause of inaction than not trying.

I'm going to adjust my question: How can my role effect change through professional development?

I want to move away from what other people do to how I can help impact their habits through strong professional development. I'm still not thrilled with the wording, but I'm interested in what structural components make a program effective when it comes to implementing ideas. To start, I brainstormed some gut feeling indicators and questions that (I hope) will guide some of my research.

- Relationships: I know my teachers and they trust me and my instruction.

- Instructional focus: Everything I do has an instructional lens or context. I do not rely on technology gimmicks to increase buy in.

- Application: All of my workshops bring a heavy focus on in-the-classroom application of ideas through modeling or case study examples.

Some other related questions:

- How does continuity of study (ie, a PD sequence rather than a one-off workshop) affect implementation?

- Is there an ideal timing? How often (in a series) seems to be effective?

- What does the interim look like in between workshops?

- Are volunteers more likely to implement training? Or are groups, even if they're elected to come by leadership?

- How does the group dynamic affect buy in or implementation after the fact? Would establishing norms at the outset remove stigma?

The featured image is IMG_6750, a flickr photo by classroomcamera shared under a Creative Commons (BY) license

I spent some time last week running through some "why" loops to home in on reasons behind my potential research question. I think the question is broad enough to allow for several avenues of exploration, but it was insightful to run through the cycle several times (below). We've actually used this mechanism as an instructional coaching team in the past and being familiar with the process helped me focus on larger issues. Granted, some of the issues contributing to some of the behaviors we see are well beyond my specific purview and definitely outside the scope of my AR project.

Below is a straight copy/paste of my brainstorming. I think items two and three are most within my realm of influence. I can use my time to focus on teachers who have recently participated in PD to help provide that instructional support. I can also work proactively with principals, helping them follow up with their staff members learning new methods or techniques and recognizing those either with informal pop-ins to see students in action or public recognition in front of their staffmates.

Why don’t teachers implement the training they’ve received in PD?

- Teachers don’t put their training into practice

- There are good ideas presented, but no time to work on building their own versions.

- The PD was focused on the why, not enough on the how

- Teachers don’t understand why they need to change practice

- The district’s communication about the offered PD is lacking clarity

- There is a lack of leadership when it comes to instructional vision.

- Teachers do not show evidence of putting training to use with students.

- Teachers don’t know how to implement ideas they’ve learned in the workshop

- There are so many demands on their time, planning new lessons falls to the back burner

- In-building support systems are lacking

- The district is strapped for money and hiring instructional coaches isn’t a priority.

- Teachers do not put learning from PD into practice.

- There is no outside pressure to implement ideas learned in training

- Principals are spread too thin to pay close attention to the inservice teachers are attending

- Principals do not know what to look for after teachers attend inservice.

- Teacher evaluations are based on outdated expectations and promote superficial expectations.

- Teachers do not communicate implementation of learning

- Workshops in the district are often standalone with no formal structure for long term support

- The resources committed to PD for several years were focused on one-off training

- The district lacked a vision for teacher development as a continual process

- District leadership did not see the value of instructional support as a formal position in the district.

- Teachers do not implement learning from workshops

- No one follows up on the learning from the PD

- There was no formal method for recognizing PD

- There is no formal expectation of implementation from supervisors (principals, etc)

"Loop" by maldoit https://flickr.com/photos/maldoit/265859956 is licensed under CC BY-NC-ND

This is an eye-opening look at the teacher-as-content-creator world of classroom DIY. Two quotes jump out that highlight the broken legislative system we have and the broken social media expectations we set for ourselves.

Teachers need their content to perform well and often, it’s the pretty, palatable posts that perform well; so that’s what teachers feel pressured to deliver.

As long as teachers continue to share online tips and tricks for shielding their students from the threat of gun violence, as if they were simply sharing book recommendations or math worksheets, in lieu of explicitly demanding gun reform, teachers will continue to carry the overwhelming burden of keeping their students alive in deadly situations.

Source: School Shootings and the Cheerily Gruesome World of DIY Classroom Prep

Running PD for an entire district is a challenge. The biggest gap I see is knowing how or when teachers actually use what they've learned in a session or a series of sessions. We have automated systems in place, but they don't give us information on the effectiveness of our instruction.

We coach our teachers to check for understanding and watch for application of learning with their students, yet this is something I have not done well with the teachers I work with. Granted, I work with all five secondary buildings (and teachers in general with my partners), so geography and time are a challenge in gathering and collating the right kind of information.

I'm interested in what kinds of supports we provide will help teachers actually use what they've learned. We run several programs, but which ones are the most effective at engaging and enabling our teachers to make changes to their teaching? What kinds of environments or availabilities are the most helpful to the staff?

Homing In

I haven't defined a specific question yet, but several I'm thinking about include:

- How long do teachers wait before implementing training they've received from the district?

- What professional development structures or systems best enable teachers to implement skills or strategies learned in professional workshops?

- How does student engagement or learning change as a result of a specific instructional change by a teacher after attending a training event?

- What are the reasons teachers do not put strategies or systems in place after a workshop?

- Do professional development workshops make an impact on day to day instruction by the teaching staff?

My main concern is that several of these questions are very subjective. Measuring the result - either quantitatively or qualitatively - will be difficult and rely on select groups of teachers self-electing an evaluation tool. We already send a basic implementation survey to teachers three weeks after an event, so my intent is to go through all of those records and begin to identify the response rate as well as the most common responses for implementation vs non-implementation by teachers. I'm also hoping to gain some candid insight on the state of our professional learning opportunities from teachers' perspectives.

I'm helping several teachers move toward standards-based grading practices this year. We work a lot on philosophy - why they'd want to use this grading mechanism over traditional scores, how to support learning, and the language of SBG in general with students - before we get into the how-to. That helps make sure everyone is in the right frame of mind.

Once they're ready to start, that's where the how-to work comes in. I know what I think about how to set up a class, but there is no gold standard when it comes to actually running the class. If you're looking to start, allow me to redirect you to Frank Noschese and his excellent blog as well as pretty much anything written by Rick Wormeli.

Today's post started as an email asking how I handled retests in my class. The following is more or less what I wrote back, with some edits for clarity and more general application.

I’m trying to up my standards based grading game. We briefly talked about this last semester, but I’m wondering...how can I most efficiently update students’ grades to show mastery when I’m having them do test corrections? Ideas welcome!!

This came in an email.

Do you do paper-and-pencil corrections? How are you building your tests? I ask because there are a few ways you could consider, but each kind of depends on your own style and class processes.

Grading paper-and-pencil corrections

When I did this, it was usually something like:

- write out the wrong answer,

- write the correct answer,

- give a reference to the right answer,

- which standard/outcome does this relate to?

So, they would go through the material, evaluate their responses, and then find the right answer and justify it. I was mainly concerned with the justification of the response, not so much that they found the right answer. I would grade their mastery on that justification, bumping them up or down a little bit.

To track it, you could download the MagicMarker (iOS only) app and mark them on Outcomes as if you were talking to them in class. It aggregates those scores into the Canvas Learning Mastery grade book and then you can evaluate the overall growth rather than give credit based on that one assessment.

Question Banks

This is definitely the most time consuming to set up, but once it's set up, you're golden. Getting questions in standards-referenced banks allows you to build out Quizzes that pull randomly, so you can give a retake or another attempt that updates those Learning Mastery grade book results. This is what I tended to do instead of paper/pencil once I had everything going.

Students would get their results and then focus on any standards that were less than a three in their Learning Mastery grades (out of four total). There'd be some kind of work involved so they weren't blindly guessing, but then they could take the test again because the questions were likely to be different with the bank setup.

Set up banks based on standard and then file questions in there. When you build the Quiz, you use Add new question group rather than Add question in Canvas. You can link the question group to a question bank and specify how many items to pull at X number of points.

Student defense and other evidence

This one is probably my favorite: just giving students a chance to plead their case...a verbal quiz, essentially. I'd use MagicMarker while we were talking to keep track of their demonstration. I would ask them to show me work we'd done, explain how they know what they know, and then prod them with more questions.

I typically did this if they were having trouble demonstrating understanding in other ways. I wanted to remove test anxiety or reading comprehension from the equation, but this was typically the last option for those kids. I'd then work with them to get over those test-taking humps (granted, this was more important to do in the AP class because they had to take the test and I needed them to be ready for it).

I think all of this boils down to getting more data into Canvas (or your LMS, if you can)...try not to rely on a single demonstration to judge understanding. My goal was to have students show mastery on standards by the end of the semester. So, if they're not getting one of them now, it still goes in as a zero but it serves as a reminder that they still have to do that standard. I was updating grades on the last day of the semester for my students. It's a weird way for them to think and it'll take some prodding by you so they don't forget that a zero can always convert to full credit. Usually what happens is a later unit will give them more context for whatever they're struggling with and cycling back after more scaffolding is more effective than trying to drill the issue immediately, if that makes sense.

If you're not using Canvas, there may be similar systems in your LMS that will help you track growth. I also have a Google Sheet template that you can use to track student growth. Shoot me an email if you'd like that and I'd be happy to send it along.

I'm taking a graduate course this semester on action research, part of which is defining and designing a question to tackle. Most of the coursework relates to classroom-level research by teachers to drive reflection and instructional change, but I'm not in the classroom right now. I'm thinking through what kind of teacher-focused research could help me in a coaching role.

- Can reflection be an emergent property in teaching given the right context to grow?

- How can formative data push teachers toward ideas in contrast with what they think is the "best" instructional habit?

- How do PLNs (local or digital) change teacher practice?

- What conditions are favorable for teachers starting - and completing - PD regimens?

- Are mixed-format (online, self-paced, in person) PD sequences more or less effective than single-format (single sessions)?

- What kind of follow up intervention or touchpoints can spur implementation of methods or ideas learned in professional development?

- How do student results from trying new methods impact the type and frequency of PD offered to teachers?

- How does implementing a new lesson or instructional method impact teacher satisfaction or overall morale?

This definitely isn't exhaustive, but it's a start. There are some others floating around my head that I can't quite verbalize yet. Much of what I'm interested in surrounds teacher intent to join PD, their actual attendance, and then, most importantly, their implementation of the methods and techniques learned together. What kinds of prompts or supports are needed to ensure follow through?

At face value, it seems collaborative action - longitudinal groups of teachers - working together has a high impact on implementation. But, given time constraints (including perceived time restrictions) on the part of teachers, this is hard to get off the ground at a systemic level during the school day.

The district as a whole is ripe for this kind of problem solving. Department and cross-department PLCs are forming and they are given freedom to choose how to spend that time. Perhaps a good way to start is to identify a team at each building willing to go through a more formal process. While their focus is on student improvement, I'm more interested in the supplemental activities I can provide as a coach to develop the action research mindset of the teacher.

Featured image from [Unsplash](https://unsplash.com/photos/1NyiWD3iorA) by David Papillon

If you work in big systems, sometimes you come across a situation where you want to share a single tab of a Google Sheet with someone else rather than the master copy. An easy way is to just make a snapshot copy of that tab and share a new sheet with them. A more advanced method is to use a parent/child relationship and some Sheets cell formulas to share an always-up-to-date copy of the master data. The problem with that (there's always a problem, isn't there?) is that it only copies the data, none of the notes or any other information that might be on the master sheet.

In this post, I'm going to give an example of a Google Apps Script that can be used to copy notes from a master parent sheet to a child spreadsheet. If you want to make a copy of a folder with working examples, ask and thou shalt receive.

The Basic Setup

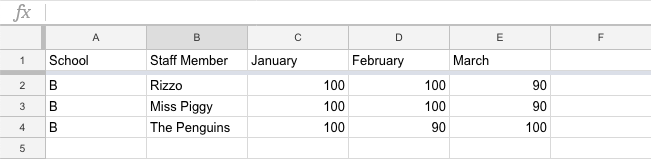

For this example, we have a master Google Sheet with several rows of data organized by location, like this:

We want to share child sheets tied to schools A, B, and C with only their relevant data. This first part is done with query and importrange in the following formula in cell A1 of the child spreadsheet.

=query(importrange("masterSheetURL","Sheet1!A:E"),"SELECT * WHERE Col1 CONTAINS 'A'",1)

Query selects every row where Column 1 contains the string 'A'. In a real situation, the building name would be the selector. The imported data looks like this:

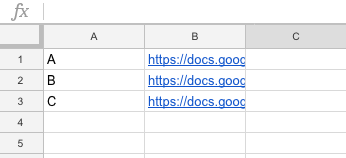

I prefer query because I don't have to select a specific range - it will look at the entire sheet for that data. If you don't want to import notes, this works really well. If you do want to import notes, make sure data is grouped together because you need to determine some offsets when writing to the child sheets. Create a second sheet in the master spreadsheet (a helper sheet) with the following structure: column A is the building (or location or other selector), column B is the URL to the child sheet you want to update, and column C is the offset. Column C will become very important as it holds the offset data for writing notes to the child sheet.

The Script

Copying notes can only be done with the .getNotes() Google Apps Script method. This script looks at the sheet and uses a couple of loops to build arrays to post to the child sheets. The first challenge is to set the offsets. Notice location B in the master sheet is rows six through eight, while on the child sheet it is rows two through four. Without setting an offset, our notes would be written into the wrong rows, and that's no fun.
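As a minimal sketch of the offset idea (not the script from the gist), here is the calculation in plain JavaScript. It assumes the master data has already been read into a 2D array shaped like what `getValues()` returns, that row 1 is a header, that the building name is in the first column, and that each building's rows are grouped together. The function name `computeOffsets` and the sample data are hypothetical.

```javascript
// Sketch only: plain JavaScript over a 2D array shaped like getValues() output.
// Assumes row 1 is a header and each building's rows are contiguous.
function computeOffsets(masterValues) {
  const CHILD_FIRST_DATA_ROW = 2; // child row 1 holds the imported header
  const offsets = {};
  masterValues.forEach((row, index) => {
    const building = row[0];
    const masterRow = index + 1; // Sheets rows are 1-indexed
    const seen = Object.prototype.hasOwnProperty.call(offsets, building);
    if (index === 0 || building === "" || seen) return;
    // First time this building appears: record how far its block is shifted.
    offsets[building] = masterRow - CHILD_FIRST_DATA_ROW;
  });
  return offsets;
}

// Hypothetical master data: header, A in rows 2-5, B in rows 6-8, C in row 9.
const master = [
  ["Building", "Item"],
  ["A", "a1"], ["A", "a2"], ["A", "a3"], ["A", "a4"],
  ["B", "b1"], ["B", "b2"], ["B", "b3"],
  ["C", "c1"],
];

console.log(computeOffsets(master)); // { A: 0, B: 4, C: 7 }
```

Location B starts at master row six and child row two, so its offset comes out as four, matching the example above.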

After running that script, the offset for each building is written to column C of the helper sheet in the master copy. I also added an onChange trigger to this function so it runs when rows are inserted anywhere in the sheet.

Now, you're ready to copy notes from one sheet to another.

This script will loop through the master spreadsheet and look for a building name it recognizes. If there's a match, it will open the child sheet and set the notes for each row using the offset provided in the previous step. To be honest, this isn't the most elegant solution, but hey, it works.
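To make the offset step concrete, here is a rough sketch (again, not the script from the gist) of picking the right block of notes for one child sheet. It assumes the master notes are in a 2D array shaped like what `getNotes()` returns, where index 0 is sheet row 1. The function name `notesForChild` and the sample notes are hypothetical.

```javascript
// Sketch only: masterNotes is shaped like range.getNotes() output,
// where array index 0 corresponds to sheet row 1.
function notesForChild(masterNotes, offset, rowCount) {
  const CHILD_FIRST_DATA_ROW = 2; // child row 1 is the header
  // Child row r lines up with master row r + offset.
  const firstMasterRow = CHILD_FIRST_DATA_ROW + offset;
  const start = firstMasterRow - 1; // convert 1-indexed row to array index
  return masterNotes.slice(start, start + rowCount);
}

// Hypothetical notes: header, A in rows 2-5, B in rows 6-8, C in row 9.
const masterNotes = [
  [""],
  ["note a1"], [""], [""], [""],
  ["note b1"], [""], ["note b3"],
  [""],
];

const notesB = notesForChild(masterNotes, 4, 3);
console.log(notesB); // [ [ 'note b1' ], [ '' ], [ 'note b3' ] ]
```

In Apps Script, an array like `notesB` could then be written to the child with `getRange(2, 1, rowCount, columnCount).setNotes(notesB)`, as long as the array dimensions match the range.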

Just in case of catastrophe, here's another little utility script you can use to clear all notes from the child sheets. This was particularly helpful when I had my offset calculations off by a row.

This is a manual process - there is no edit or change event you can hook into when a note is added or deleted. So, I wrapped all of this into an onOpen simple trigger to add a custom menu.

There are definitely improvements that can be made. Here are all the files as a gist so you can clone, copy/paste, and hack away at your own sheets.

Tom Woodward has a semi-regular weekly roundup blog post with links to interesting things he finds on the Internet. A couple weeks back, he posted a link to something called arbtt (pronounced ar-bit? that's what I say, anyways), which is actually an acronym for "arbitrary, rule-based time tracker." In short, it runs in the background and samples all the open programs on your computer, once per minute, and writes that information to a text log.

It's super, super geeky. Like seriously. I've used todo-txt for almost two years now and I recently started tracking how long I work on a given task so I can keep better track of what I spend my time on. But, the catch is, I have to remember to turn on the tracker. arbtt runs in the background. The data is standardized, so you can write different queries and poll for information in very, very granular ways.

It was a real pain to get set up. After two days of fussing on and off, I have it running well on my computer. The documentation for Mac OS is really lacking, so here's what I did for my machine.

(This is fairly technical, so jump to the bottom if you want to see what it does once it's running.)

Install

Installation wasn't too bad for arbtt on Mac. The install instructions on the project page worked fine, particularly because I already had Homebrew installed and set up. I'm not going to rehash those steps here. Go read them there.

Binaries

Getting the thing to run was a different story. arbtt installs itself at the User level on Mac OS in a hidden .arbtt directory. This holds the configuration file and the actual capture.log file.

The actual executables are in the .cabal directory (also under /Users/yourname) because they're managed by the package manager. The documentation says to go into .cabal/bin and run arbtt-capture in order to start capturing data with the daemon.

Well, that didn't work.

The files in .cabal/bin are symlinked to the actual executables, and from what I can gather, Mac OS doesn't like that. At all. So, to run the scripts, you have to call the absolute path to the actual binaries. Those are in .cabal/store/ghc-8.4.4/arbtt-0.10.1-*/bin. I don't know enough about package managers, but those binaries are buried. I ended up creating aliases in my terminal so I can use one-line invocation.

categorize.cfg

Because the collection of information is arbitrary, you can collect without knowing what you want to know, which is pretty cool. The syntax for the query rules is in Haskell, which I don't know, so mine is a little hacky right now. In my playing, there are two main rules:

- Target the current window with $program.

- Target the current window title with $title.

You can use standard boolean operators to combine queries to get specific information. Each query pushes to a tag object that contains a Category and identifier in a Category:identifier syntax. A query I'm using to watch my Twitter use is:

current window $program == "Vivaldi" && current window $title =~ [m!TweetDeck!, m!Twitter!] ==> tag Social:twitter

So, it checks for both the browser (I use one called Vivaldi) and the window title before pushing (==>) to the Social:twitter tag. Mine are all really rudimentary right now, but you can sort and filter by date, titles, even directory locations if you're working on your filesystem. Since the underlying data never changes, you can just write new rules that will parse with arbtt-stats (next).

arbtt-stats

The main capture daemon just runs in the background and collects data. The workhorse is arbtt-stats which parses all of the raw data. You can run arbtt-stats in the command line and get a full report of all matched rules or you can query by specific tag with the -c flag. So, executing arbtt-stats -c Social:twitter would return only the time recorded where Twitter was the focused window.
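To show the idea behind a rule plus a per-tag report, here is a loose sketch in plain JavaScript. It is not how arbtt is implemented; it only mimics the logic of the Twitter rule above and assumes one sample per minute, as described earlier. The sample data and the names `tagFor` and `minutesFor` are hypothetical.

```javascript
// Sketch only: mimics the idea of a rule, not arbtt's actual implementation.
// Each sample stands for one capture of the focused window, once per minute.
const samples = [
  { program: "Vivaldi", title: "TweetDeck" },
  { program: "Vivaldi", title: "Home / Twitter" },
  { program: "Vivaldi", title: "Debian wiki" },
  { program: "Terminal", title: "arbtt-stats" },
];

// Rough equivalent of: $program == "Vivaldi" && $title =~ [TweetDeck, Twitter]
function tagFor(sample) {
  const patterns = [/TweetDeck/, /Twitter/];
  const isTwitter =
    sample.program === "Vivaldi" && patterns.some((p) => p.test(sample.title));
  return isTwitter ? "Social:twitter" : null;
}

// Count matching samples and convert to minutes.
function minutesFor(tag, allSamples, minutesPerSample = 1) {
  return allSamples.filter((s) => tagFor(s) === tag).length * minutesPerSample;
}

console.log(minutesFor("Social:twitter", samples)); // 2
```

Because the raw samples never change, swapping in a different `tagFor` rule and re-running the count is all it takes to ask a new question of old data.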

arbtt-graph (addon)

This all happens in the command line, which isn't super helpful, especially if you have a lot of results like this:

Filtering down by tag with -c is helpful, but it would also be nice to turn this into something graphical. That's where arbtt-graph comes in. It's a Python-based parser that generates a website based on arbtt stats for each day as well as a four-month aggregate.

The biggest problem I had with arbtt-graph was that python isn't super happy with relative file locations. I had to edit the scripts with absolute paths to write and read all of the necessary documents. It's a fun little helper on my computer, and if I was insane, I might investigate putting it online somewhere with a cron job, but that's for another day.

I've never had a case for my Raspberry Pi because...well...I just haven't.

One of our schools recently purchased all kinds of STEM stuff and I've been spending some time learning how to use the 3D printers, drones, along with a bunch of Spheros. I've used the Spheros a bunch and while I've 3D printed in the past, I've not gone through the whole process by myself. Today was the day.

We have Flashforge Finder printers which, compared to others, are a breeze to use. They're compact, easy to maintain, and print well (with one exception, but I think the extruder is a little blocked). I grabbed a Thingiverse model for the sake of time and got started.

My biggest problem was using the wrong software. I grabbed Slic3r to do the print rendering because it's what I'd used in the past. But, for some reason, I couldn't get the print bed size to set correctly, so the prints were going off the edge. Then, I realized that Flashforge has their own slicer, and once I installed that, it was all good.

The bottom print file was up and off, no problem. The upper half of the shell caused me some issues because the print file had it oriented as if it were fully rendered.

I flipped it upside down and added a bigger print footprint and it was finally able to start running (after realizing an extruder was bad and changing machines).

Three hours later, I had two halves of a nice little case for the nice little computer.

Photo CC0 Public Domain by me

This week, I co-taught an AP Biology class with one of our high school teachers. She was looking for a way to have students wrestle with the molecular structure of DNA, so I suggested a card sort activity.

[David Knuffke](https://twitter.com/davidknuffke) suggested the [Central Dogma sort from HHMI](https://www.hhmi.org/biointeractive/central-dogma-card-activity), but they weren't quite at the point of replication/transcription/translation. I ended up modeling its structure for our molecular sort. [Here's the Google Doc](https://docs.google.com/document/d/1Pv-iCEkZT09xGStWe6AL21tnYwT5wWJATrCqNTCQA0k/edit?usp=sharing).

This sort worked well for the students because they had already spent some time reviewing 3' --> 5' structure as well as the purine/pyrimidine relationship. Most of their work had been with simple block models at this point and I wanted to stretch them to think about the atomic interactions. We started by handing out just the pictures and giving pairs of students about three minutes to discuss and sort together. Here are three samples:

Each group was able to explain their rationale to one another and to the class. There are similarities between groups 1 and 2. I _really_ loved that group 3 was almost the exact opposite of the first two groups, mainly to illustrate to the class that the _process of thinking_ is the critical piece to the exercise, not necessarily the 'right order.'

After groups felt good about their initial order, I handed the terms out and asked them to label each step. Connecting the visual to the language of DNA structure and introducing the idea of replication left us in a good spot for the day.

No matter how often I do card sorts, I never cease to be amazed at how _deep_ conversations get as students are putting cards in order. I think that's the challenge - getting a good set of images or graphics in place which promote those conversations without leading to a 'right answer,' especially in the AP context.

[The Google Doc](https://docs.google.com/document/d/1Pv-iCEkZT09xGStWe6AL21tnYwT5wWJATrCqNTCQA0k/edit?usp=sharing) with the cards and terms is public, so feel free to grab it for your class if you're interested.

I've been playing around with building websites and self-teaching myself (is that redundant?) web development languages. I feel like I've crossed some kind of line in the last 18 months where I can identify a problem, conceptualize a web-based solution, and then build it much more easily than I could in the past. I know that skill comes from experience, but it's nice to reflect back on my learning and see growth.

I built mostly for myself at the start. I run a simple [static homepage](https://ohheybrian.com) and a few small pages (see links in the right sidebar) for some small Google Apps Add Ons that haven't seen much love lately (shame on me). I've also kicked off a couple of projects for the district that have seen some daylight.

Most of this has been totally private. I would cruise [GitHub](https://github.com) from time to time for [interesting](https://github.com/bennettscience/tweetmineR) [projects](https://github.com/bennettscience/TimelineJS3) [to play with](https://github.com/bennettscience/gifify), but never really contributed to any of the projects.

Without getting too deep, Git tracks all changes made to files by anyone working on a project. It's terrifyingly complex, especially when you work yourself into an unsolvable tangle, but it does make seeing changes to code really, really handy. GitHub takes all of this information and puts it into one nice place for people from all over the world to see and work on together.

Contributing to a project on GitHub felt like walking into a room with a bunch of geniuses and asking what I could help with.

Recently, I came across a really cool project called [Lychee](https://lycheeorg.github.io/) which is a self-hosted photo sharing site. It runs on any web server and gives you a nice, clean tool to upload, organize, and share photos. [I downloaded and got it running](https://photos.ohheybrian.com) after a couple days of [poking around](https://blog.ohheybrian.com/2018/11/forget-the-mac-mini-bring-on-the-raspberry/). While I was using it, I noticed a small error: toggling a setting didn't work because of a wrong function name. I was able to look at the code, find, and fix the error on my own site within about 20 minutes.

Then came the scary part - submitting a fix to the problem.

I had done this only once, and it ended with the owner saying, "Thanks but no," which was a bummer and a little embarrassing. I was about to submit some code that would be used in a real, live app that people downloaded and installed on their machines. My name would be attached to this particular fix (git tracks who changes what). I was worried that my fix would magically not work as soon as I posted it.

All of this fear was built up in the _perception_ of what community-based projects require. Most of the anxiety I felt was because of the image of this world being open only to people who could speak the language or do things perfectly (sidebar: this is true, especially in the [tech sector](https://www.recode.net/2018/2/5/16972096/emily-chang-brotopia-book-bloomberg-technology-culture-silicon-valley-kara-swisher-decode-podcast), and GitHub isn't immune. More on that later.). But, things break. Problems need to be solved. And I'd just solved one that could help someone else. And anyone can submit fixes to code, so I decided to [give it a try](https://github.com/LycheeOrg/Lychee/pull/112).

Nothing scary happened. The site manager sent me a quick thanks and implemented the code.

I just had a small bugfix pull request merged into a real-life open source project, so that's cool.

—Brian E. Bennett (@bennettscience) November 22, 2018

I started looking through some of the other issues on this particular project and noticed one that I felt like I could help with, even if it was just exploring possibilities. Someone wanted to be able to tag photos with Creative Commons licenses using a menu, not just throwing tags into the `tag` field.

Long story short, this was a much bigger problem. There were easily six to ten non-trivial changes necessary to make this update happen. Over the last four days, I've worked hard to learn about the app's structure, the languages used to make the whole thing tick, and how to implement this feature without breaking the entire site. Today, [that feature was added](https://github.com/LycheeOrg/Lychee-front/pull/18) after some back and forth on code structure with the main author.

I'm a hobbyist. I don't plan on going into development full time because I like teaching too much. But there are definite things I learned through this weekend's project:

- **Submitting requests to update an app is just that: a request**. I'd built up this idea that submitting code - even flawed code - to a project would be a waste of that person's time. But really, providing a starting point can help make progress on complex issues.

- **Take time to look at the culture around the project**. The first one I'd submitted to was a single guy who wrote a script to make something easier. In hindsight, submitting a suggestion for a feature wasn't in his interest, so he had every right to say no. Larger projects have more eyes on requests and dialog is easier to kick off.

- **Pay attention to the project's style guides and code requirements**. I was able to save myself a major headache by going thoroughly through my code to make sure it was at least up to the grammatical standard set by the project owners. That way, we could focus on implementation discussion rather than form.

- **If you can't submit code, submit bug reports or provide insight in other discussions**. The documentation pages are a great way to get involved as well. Bugs need to be reported and instructions don't write themselves. Both are low-barrier entry points and can help get you established as a good-faith contributor to the community.

- **Take a big breath of humility**. I didn't submit my request thinking it was the best solution, only that it was a _potential_ solution. After I submitted, we spent a day going back and forth, tweaking items here and there before adding it to the core files. [And there was _still_ a bug we missed!](https://github.com/LycheeOrg/Lychee/issues/120) Code is written by people, and people make mistakes, so plan on your code having mistakes. Know that you will get feedback on whatever you submit, but look at the goal and avoid taking it personally.

Last night, I got [a self-hosted photo sharing site](https://photos.ohheybrian.com) up and running on my raspberry pi 3. You can see more about that process [here](https://blog.ohheybrian.com/2018/11/forget-the-mac-mini-bring-on-the-raspberry/).

Putting it on the real, live Internet is scary. Securing a server is no small task, so I took some steps based on [these tips](https://serverfault.com/questions/212269/tips-for-securing-a-lamp-server) to make sure I don't royally get myself into trouble.

(I have a sinking feeling that posting this exposes vulnerability even more, but c'est la vie.)

To start: new user password. Easy to do using `sudo raspi-config` and going through the menus. It's big, it's unique and no, I'm not giving any hints.

As for updating the OS, I have a cron job which runs as root to update and reboot every couple of days. Lychee is [active on GitHub](https://github.com/lycheeorg/lychee) and I've starred it so I'll get updates with releases, etc. I also took some steps to separate the Apache server from the OS.

Putting a self-hosted server online requires port forwarding. That involves opening specific ports to outside traffic. I only opened the public HTTP/HTTPS ports. Several sites say to open SSH ports, but I think that's where I feel very timid. I don't plan on running anything insanely heavy which would require in-the-moment updates from somewhere remote. (There's also the fact that my school network blocks SSH traffic entirely, so there's even less reason to enable it.)

Once the ports were open, I had to find my external IP address and update my DNS Zone records on [Reclaim Hosting](https://reclaimhosting.com). By default, Comcast assigns dynamic IP addresses so they can adjust network traffic. Most tutorials encourage users to request static IPs for home servers, but others say they've used a dynamic address for years without issue. I'll see if I can work myself up to calling.

Anyways, I logged into my cPanel and added an A record for a new subdomain: [photos.ohheybrian.com](https://photos.ohheybrian.com) that pointed to my public IP address. The router sees that traffic coming in and points it at the Raspberry Pi. I tested on my phone and, hey presto, it worked.

Opening HTTP/HTTPS ports came next. It's easy to get unencrypted traffic in and out. But, like the rest of my sites, I wanted to make sure they were all SSL by default. I couldn't assign a Let's Encrypt certificate through Reclaim because it wasn't hosted on their servers. [The Internet came through with another good tutorial](https://www.tecmint.com/install-free-lets-encrypt-ssl-certificate-for-apache-on-debian-and-ubuntu/) and I was off.

First, I had to enable the `ssl` package on the Apache server:

```bash
sudo a2enmod ssl
sudo a2ensite default-ssl.conf
sudo service apache2 restart
```

Once it could accept SSL traffic, it was time to install the Let's Encrypt package, which lives on GitHub:

I then had to install the Apache2 plugin:

```bash
sudo apt-get install python-certbot-apache
```

From there, the entire process is automated. I moved into the install directory and then ran:

```bash
cd /usr/local/letsencrypt
sudo ./letsencrypt-auto --apache -d photos.ohheybrian.com
```

It works by verifying you own the domain and then sending the verification to the Let's Encrypt servers to generate the certificate. The default life is three months, but you can also cron-job the renewal if nothing about the site is changing.

After I was given the certificate, I went to https://photos.ohheybrian.com and got a 'could not connect' error, which was curious. After more DuckDuckGoing, I realized that SSL uses a different port (duh). So, back to the router to update port forwarding and it was finished.

There are several steps I want to take, like disaggregating accounts (one for Apache, one for MySQL, one for phpMyAdmin) so if one _happens_ to be compromised, the whole thing isn't borked.

---

_Featured image is They Call It Camel Rock flickr photo by carfull...in Wyoming shared under a Creative Commons (BY-NC-ND) license_

This weekend I decided to try and tackle [turning a Mac Mini into a server to host my own photos](https://blog.ohheybrian.com/2018/11/reviving-the-mac-mini/). Well, that turned into a real mess and I abandoned the idea after I had to disassemble the computer to retrieve a stuck recovery DVD. We went all kinds of places together, but this Mac couldn't go the distance with me this time.

So, I grabbed the semi-used Raspberry Pi 3 that was working as a wireless musicbox on our stereo (kind of) and gave it an overhaul. I removed the [PiMusicbox OS](http://www.pimusicbox.com/) and went back to a fresh Raspbian image. (Actually, I only grabbed the Lite distribution because I won't need to go to the desktop. Ever.)

I wanted a basic LAMP (Linux - Apache - MySQL - PHP) stack to run the website, specifically because the end goal was to have [Lychee](https://github.com/LycheeOrg/Lychee) installed and running on a public space. I relied on two _really good_ tutorials to help me through the process.

The first, written by a guy named Richie, is a [full-blown, step-by-step guide](https://pchelp.ricmedia.com/setup-lamp-server-raspberry-pi-3-complete-diy-guide/) on all the software setup. He even uses WordPress as his thing-to-install-at-the-end, so that's a bonus. It's written for non-technical people and isn't just a wall of command line code to type in. He had explanations (why does he always use the `-y` flag on his install commands?) and screenshots of what to expect. Really superb. If you're looking to try setting up a local server (available only on your wifi network) or have students who want to try, this is the place to start.

Once everything was going, I went to the GitHub project and used a quick command to download the package onto the Pi:

`wget https://github.com/LycheeOrg/Lychee/archive/master.zip`

and then unzipped the project:

`unzip master.zip`

This put all of the source files into the `/var/www` directory on the Pi, which becomes the public space. For updates, I can just use `git pull` in the directory and I'll get those updates automatically (that only works if the directory is a `git clone` of the project rather than an unzipped download). A cron job could even take care of that, so double bonus.

I was able to go to my internal IP address and see the setup prompt. I signed into my MySQL admin and I was off.

CC0 by Brian Bennett

Photos are organized by album and tags, so you can quickly search for items. I uploaded an old photo of my wife, just to see if it would accept files.

CC0 by Brian Bennett

There's another option I need to dig into that says "Upload from Link," but I'm not quite sure what that does yet. In the short term, I can start uploading photos here rather than Flickr.

The second article had some hints about how to get the server visible to the public. Your modem and router take a public IP address from your ISP and convert it into something you can use in the house. So, getting the Pi up with an IP address is easy to do and use, but only if you're at home. Making it publicly available requires two things:

- Some serious guts (this was the part I was most scared about)

- Some IP address and DNS work (potentially)

RaspberryPiGuy, who apparently works for RedHat, has a guide on [taking your server public](https://opensource.com/article/17/3/building-personal-web-server-raspberry-pi-3). I added a couple more packages to help with security, like fail2ban, which blocks an IP address after too many login attempts. I'm also going to split my network one more time so my home computers are sequestered from this little public slice. I found my public IP address on [this site](https://www.iplocation.net/find-ip-address) and then edited my router to forward traffic to the **public** IP (my house) to the **Pi** (the internal network IP).

I was able to use my phone on 4G to go directly to the public IP address and see public photos in my library, so mission accomplished for tonight. The next steps are to do some DNS forwarding so you don't have to memorize an IP address to see pictures. Some other considerations are to get a static IP so those DNS records don't get messed up, but I have to work up to that call to Comcast.

---

Featured image is Looking Through the Lens flickr photo by my friend, Alan Levine, shared into the public domain using Creative Commons Public Domain Dedication (CC0)

My wife bought a Mac Mini toward the end of college that has been sitting in our basement pretty much since we went to Korea in 2009. I've been wanting to do something with it for a while and with Flickr changing its accounts, now seemed like a good time.

I was looking for photo sharing alternatives to Flickr, mostly because I can't afford a pro membership and I'm already over the 1000 photo limit being imposed in January. I came across [Lychee](https://github.com/LycheeOrg/Lychee/), which is essentially single-user, self-hosted photo management. (Check out their [demo site](https://ld.electerious.com/) - it's pretty impressive). My home photo collection could also stand being backed up somewhere more consistently, so my goal is to convert the mini into a self-hosted photo sharing site so I can continue to post out on the web and have a backup at home.

*cracks knuckles*

I set up in the dining room and got started.

I have to say, it was pretty amazing plugging in this computer, which hasn't seen use in nearly a decade, and watching it boot up as if I had used it yesterday.

Tonight's fun is transferring old photos from a much-neglected Mac mini and then installing Linux. pic.twitter.com/U4bbG7ShFp

—Brian E. Bennett (@bennettscience) November 15, 2018

Macs have [long-had web hosting built right in](http://www.macinstruct.com/node/112). Apache and PHP are included by default and it's easy to install MySQL for databasing. I was hoping to go the easy route and just use the default tools. LOL.

Lychee requires PHP 5.5+. The mini (late 2006 model) has PHP 4.4 and Apache 1.3 installed. No good. I started searching for [ways to upgrade both](https://jeromejaglale.com/doc/mac/upgrade_php5_tiger), but the recommended site with ported versions for old copies doesn't exist anymore.

So, I grabbed another Mac for more efficient Googling. There was also beer.

The command center is fully operational. pic.twitter.com/3ScrhI76da

—Brian E. Bennett (@bennettscience) November 15, 2018

The best option, I think, is to boot into Linux rather than OSX 10.4. So, I started researching Debian distributions that would work on older hardware. My plan was to wipe the entire hard drive and dedicate it to server resources. When I landed on the Debian wiki, they had a page specifically for older, Intel-based Macs. This line caught my eye:

The oldest Mini (macmini1,1) is typically the most problematic, due to bugs in its firmware. When booting off CD/DVD, if there is more than one El Torito boot record on the disc then the firmware gets confused. It tries to offer a choice of the boot options, but it locks up instead.

That's not good. I have two choices: I can partition the drive to prevent losing the entire machine or I can go for it and hope that the OSX recovery DVD I have in the basement still works. (I'll probably partition, just to be safe.)

Luckily, two lines later, the Debian wiki notes that specific builds are now available which only include _one_ boot record, which solves the problem. [A quick 300MB download of the Mac-specific distribution](https://cdimage.debian.org/pub/debian-cd/current/amd64/iso-cd/) later and I'm ready to burn the disk image to a USB drive with [Etcher](https://github.com/balena-io/etcher).

Next steps are to actually do the Debian install.

an element is anything that needs to be learned or processed and the interactivity relates to how reliant one element is on other elements for comprehension.

Source: Element Interactivity in the Classroom

I was first introduced to working memory by [Ramsey Musallam](https://twitter.com/ramusallam) and I've pulled it out every now and then in workshops with teachers. The idea is easy to grasp, but hard to put into specific practice when planning.

I'd never heard of "element interactivity" either, but this is - hands down - one of the _most_ approachable descriptions of how to adjust planning and instruction to account for cognitive limitations with novel or complex material.

---

_Featured image "Dandelion Seeds" flickr photo by thatSandygirl https://flickr.com/photos/thatsandygirl/34111155501 shared under a Creative Commons (BY-NC-ND) license_

When taking the necessary in-depth look at Visible Learning with the eye of an expert, we find not a mighty castle but a fragile house of cards that quickly falls apart.

Source: HOW TO ENGAGE IN PSEUDOSCIENCE WITH REAL DATA: A CRITICISM OF JOHN HATTIE’S ARGUMENTS IN VISIBLE LEARNING FROM THE PERSPECTIVE OF A STATISTICIAN | Bergeron | McGill Journal of Education / Revue des sciences de l'éducation de McGill

Hattie's effect sizes are often thrown around as catch-all measurements of classroom methods. This reminds me of the learning styles discussions from several years ago. Both of these approaches have the same critical danger: reducing teaching and habits to single styles or single measures of effect is bad practice.

The ideas of learning styles or effects on instruction are fine, but not when presented as scientific fact. A statistical breakdown of Hattie's effect sizes shows this clearly, as evidenced by this line:

Basically, Hattie computes averages that do not make any sense. A classic example of this type of average is: if my head is in the oven and my feet are in the freezer, on average, I’m comfortably warm.

Aggregating each category into a single effect size calculation disregards all of the other confounding variables present in a given population or individual. Learning styles has the same reductionist problem. In the mornings, reading works better for me. By the end of the day, I'm using YouTube tutorial videos for quick information. The style changes given the context and the idea of a single, best style ignores those context clues.
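The oven-and-freezer line is easy to check with arithmetic. This small sketch uses made-up temperatures (not data from the article) to show how a mean on its own hides the spread underneath it:

```javascript
// Sketch only: the temperatures below are invented for illustration.
function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

function spread(values) {
  return Math.max(...values) - Math.min(...values);
}

// Degrees Celsius: feet in the freezer, head in the oven.
const temps = [-18, 92];

console.log(mean(temps));   // 37
console.log(spread(temps)); // 110
```

The average lands near body temperature, while the 110-degree spread is the part that actually matters. A single pooled effect size can flatten differences between studies in the same way.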

Use descriptors and measurements with care. Recognize the deficiencies and adjust for context as needed.

Do you ever give personal help?

I can try to help here and there. If you have something specific about this method, you can leave it here as a comment so others benefit from a (possible) answer.

I’m trying to do something similar to this but I get the error: You do not have permission to call SpreadsheetApp.openByUrl

I’m reading that this can not be used in a custom function, so not understanding what I am doing differently than what you have done here.

Thanks for any help.

There are a couple possibilities. It won’t work if you’re trying to run it using an event or if you don’t have edit permissions on the child spreadsheet. The other possibility is that the sheet might not have reauthorized you when you updated the script. To do that, sometimes I’ll write a little function that forces the OAuth window to open again and it’ll pick up the new permissions:

Does this work for comments as well? I am having a hard time setting up another sheet (we have two and the “master” sheet will have information that does not need to be shown on the 2nd sheet). The 2nd sheet is for clients. I have the IMPORTRANGE function working and only pulling the information I want but the comments are not coming through.

Unfortunately, no. Comments aren’t actually a “part” of the document in the sense that you can get them with IMPORTRANGE. To copy comments, you would need to use some scripting to pull them from one sheet to another.

I hope you still look at this article. I have always been a heavy user of comments in cells. When I used Excel or Open Office this was not a problem. I could copy/paste large portions of text into the comment in one cell, however, with Google Sheets this does not seem to work. I can copy text out of the cell comment OK, but when I try to paste text into the cell comment it does not work. Unfortunately I got used to comments as a way to streamline my spreadsheets, and by now I have hundreds of spreadsheets created with Excel or Open Office that are full of comments. Appreciate any ideas you may have.

Many thanks in advance. Rafael.